Revista Música Hodie, Goiânia - V.12, 302p., n.2, 2012

Studying the Computing Implementation of Interactive Musical Notation

José Fornari (UNICAMP, Campinas, SP, Brasil)

tutifornari@gmail.com

Abstract: Formalized music is usually based on the asynchronous creation of musical structures by the composer, that are later expressed in the form of music notation. This can also be considered as an algorithmic structure that, once executed by the musical performer, reaches the state of sonic art; the music per se, formed by the organization of sounds along time. This is perceived by the listener whose cognition attributes to it a personal meaning. This article introduces a computational process that aims to artistically explore the inversion of its natural order, respectively given by: the composer, the performer and the listener. Throughout a computational model, listener perception data is retrieved and used to control the dynamic creation of musical notation in real-time. After this process is over, the final result is a structured musical notation, generated by a self-organizing process given by the systemic interaction of independent agents, that are: the computer, the composer, the performer and the listeners. Keywords: Musical notation; Computer music; Interactive music.

Um Estudo sobre a Implementação Computacional de Processos de Notação Musical Interativa

Resumo: A música formal normalmente fundamenta-se na criação assíncrona de uma estrutura musical pelo compositor, amalgamada na forma de notação musical. Esta pode ser vista como um algoritmo que, uma vez executado pelo intérprete, adquire o status de imaterialidade da arte sonora, ou seja, a música de fato, formada pela imaterial organização de sons ao longo do tempo. Estes são percebidos pelo ouvinte que atribui a tal estrutura um significado em particular. Este artigo introduz um processo computacional que pretende explorar artisticamente a inversão da ordem natural de criação artística: compositor, intérprete, ouvinte. Através de modelos computacionais, determinados dados da percepção do ouvinte são coletados, e/ou da interpretação do músico. Estes controlam a criação dinâmica de uma notação musical interativa. O resultado é alcançado no final da performance, quando tem-se uma partitura musical formal, criada por um processo auto-organizacional advindo da interação sistêmica entre os agentes: computador, compositor, intérprete e ouvinte. Palavras-chave: Notação musical; Música computacional; Música interativa.

Lately, human interaction with, and throughout, computers is becoming increasingly ubiquitous. Interact with computers is now part of the daily routine of a growing number of people from all walks of lives and parts of the world. Memory and processing capacity of machines, according to Moore’s Law, has been steadily doubling every 18 months, since the middle of the twentieth century (Moore, 1965). As known, computers can also be used for musical purposes, operating as independent processing units, or interconnected in a social network. Portable machines (i.e. laptops, smart-phones, etc.) can now carry out processes of musical analysis, sound synthesis and sound processing, generating sounds of great complexity, in terms of parametric control of their informational richness and perceptual relevance. This same technology also allows the recording and retrieval of data from human bodly movements; gestures and expressions, captured by a diversity of sensors, such as accelerometers, gyroscopes, cameras and microphones (Kim, 2007). Several types of data acquisition interfaces of body movements are labeled as: Gesture Interfaces (Triesch, 1998). They allow a wide range of dynamic interaction and real-time control of artistic computational processes, fostering and encouraging the communication between humans and machines. The fundamental step in order to provide such artistic interactivity was achieved together with the surprisingly increased capacity of processing power and memory of machines, which also helped the design and built of gestural interfaces capable of acquiring data in real-time, by motion sensors that can detect subtle movements of the artistic performance, and transmit this data in real-time and wirelessly. In this scenario, technology provides the means to allow the design of virtual musical instruments, as well

Revista Música Hodie, Goiânia - V.12, 302p., n.2, 2012 Recebido em: 00/00/2012 - Aprovado em: 00/00/2012

as the creation of adaptable computational methods for the analysis, processing and synthesis of sounds (Fornari, 2011).

The dynamic processes of analysis, processing and sound synthesis in computer music can occur through the manipulation of two data categories: 1) Acoustic and 2) Symbolic. Acoustic data are directly related to the representation of waveforms compounded by quasi-periodic successions of pressure variations (compressions and expansions) in an elastic medium (normally the air), that describes our sonic reality. Since the dawn of digital audio, in the early 90s (with the marketing spread of Compact Disks, or CDs) the term “audio” has become synonym of “digital audio” formed by huge arrays of integer numbers, named points (in CD standards, 44100 points per second, for each channel), calculated by sampling the acoustic signal in regular periods of time (the sampling rate) and intensity (bit resolution). This process reduces sound representation to a time series, which greatly facilitates sound analysis, processing and synthesis in computer models. Symbolic data, on the other hand, conveys the structure of controlling parameters of a sonic or musical process, instead of conveying any actual audio data. These can be defined by controlling protocols, such as: Music Notation, MIDI (Musical Instrument Digital Interface), or, the more recent OSC (Open Sound Control). Symbolic data give a map to the sound generation, but does not carry any actual sound information. Data calculated by computer models that correspond to musical descriptors (acoustic or symbolic) are called predictions, and their intention is to emulate the human ability of perception, cognition and affection evoked by some aspects of music. Thus, one could use the predictions of musical descriptors to control the parameters of a computational process for the automatic creation of music notation. This must necessarily be a computational model of one of the following categories: 1) Deterministic (or Formal), 2) Stochastic (or Statistical), 3) Adaptive (or Evolutive). Deterministic models uses formal mathematical methods to model a phenomenon and thus predicting their behavior, or output data. Here we compare deterministic models with traditional music notation, where a composer creates a fixed and unique structure, in the form of a score, to be later decoded (interpreted) by the musician. Probabilistic models, on the other hand, use statistical methods to predict the likelihood of a particular result, state or behavior, within a range of possible solutions. They are also described as Stochastic models, which implies the random development of these systems output along time. Stochastic processes have been explored by computer music composers such as Xenakis, who gives a minuscious description of his creational method with this type of music composition, in the book: “Formalized Music: Thought and Mathematics in Music” (Xenakis, 1971). Adaptive models have a dynamic structure. They are not based on a fixed set of equations, such as the deterministic models, nor in stochastic methods of predicting a range or likelihood of possible outcomes. Adaptive algorithmic structures can adapt (evolve) along time, allowing they to better fit the dynamic search for the best possible solution for any given problem, in a given moment or period of time. In computer science, it is common to refer to a particular type of solution as a “problem” to be solved. Here we compare these kins of computational models with the following three types of logical reasoning: 1) Deduction, 2) Induction, 3) Abduction (Menzies, 1996). Deduction corresponds to deterministic methods, as they provide unique and specific solutions, or conclusions, for the same problem. Induction is related to stochastic methods. Their type of solution is not restricted to one outcome at a time, but to a range of possibilities expressed as a tendency or percentage for the expected solution of a problem, which ends up describing a rule. Abduction is related to adaptive methods, such as Evolutionary Computation (EC), which automatically recreates new solutions, at any given moment of its execution, describing a hypothesis, or a new model structure for the problem that is being modeled, or just by guiding the dynamic path of an evolutionary process (Oliveira, 2010).

An interactive computational process can be of any type of the ones above described: deterministic, stochastic or adaptive. One reasonable possibility would be to generate compositional material originally mixed (composed of acoustic and symbolic musical material) created by the dynamic interplay between the machine (the adaptive algorithm) with the human user, being this one either a musician performer, a composer or even an artist of another artistic field, such as: a dancer, an actor/actress or a visual artist. The generation of mixed sonic materials brings about new possibilities, such as the exploration of the sight-reading of musical notation generated during performances, considering musicians’ sigh-reading ability being enough developed to allow him/her to easily play the new symbolic data (music notation) to be performed. Under certain aspects, symbolic material created by such system can be seen as originated by formal (notational) performance initially started as an improvisation performed by one musician. This may refer to the cybernetic concept of mutual feedback occurring between agents of this musical process: Human and Machine (Vaidya, 1997).

Creating music through the notational feedback of acoustic material generating symbolic data, between the interaction of human and machine, makes the authorship (and ownership) of this type of musical work less conspicuous. In a first instance, music composed and performed by such system, has at least three creators: 1) The composer of the starting material, which may also be the designer who created the machine algorithmic compositional rules whose system will follow to generate musical notation. 2) The computer model per se – whether it is deterministic, stochastic or adaptive – dynamically creates new musical scores which is not expected or designed by the composer. 3) The performer, which plays the music notation being dynamically generated and thus adds his personal aesthetic concepts, which not only characterizes the performance but also interact with the computational process of music notation generation. However, such system is able to generate a new musical composition (in the form of musical notation) at each musical performance. This would bring musical notation creation closer to musical interpretation, where each new performance is unique. This brings about a new approach of exploring musical possibilities. In the case of the study presented here, each new score generated by each performance would be a new composition in itself, or at least, it should be considered the instantiation of a process in a higher degree of musical creation and controllability; in other words, a Meta-Compositional process. What is known for sure is that, at the end of this process, the achieved result is a musical score generated by the interaction of three agents: Human-Machine-Human, which corresponds to: Composer-Algorithm-Musician. Each agent in the process has its own characteristics, that are expressed during the elaboration of the compositional process, in different variations, degrees and forms. Different from the traditional manner of formal music creation, free improvisation sessions, or even the 20th century computer music, the central figure of the compositor is here shattered, distributed and replaced from one single individual to a dynamic and interactive process of music making.

The actual implementation of a computer model for the automatic synthesis of music notation through the acoustic interactivity between human and machine might necessarily focus on the generation of real-time structures of notation that are simple, unique and consistent. It is noticeable that formal notation of European classical music, from the viewpoint of its graphical computational representation, is constituted by a complex organization of icons, which produce a large and sometimes redundant range of possible combinations, given the need of this system to classify and identify the largest possible range of musical gestures (heights, dynamics, durations, articulations, tempo, expression, moods, etc.) to a broad set of musical instruments. Normally, a well-trained musician can understand it and decode these symbols into music, as far as the notation is in tune with his/her training (for example, a violist may find difficult to read notation written in the clefs of G or F, that are common for pianists. If traditional music notation is, in terms of algorithmic implementation, graphically complex and redundant; they can also be seen as formalized and efficient, for human cognitive terms, and studied by several other researches, in different fields of science. Other forms of musical notation, such as the “Graphic Scores” (GS) are known to allow musicians to experience greater flexibility and freedom of interpretation. If GSs are more simple in terms of their graphical formalization, they are less precise and more dubious to be interpreted than formal western music notation. For this reason, GSs are often used in the practice of free improvisation, instead of aiming to replace or extend the usage in formal musical composition, which is still mostly made out of traditional music notation and given in the form of a fixed and invariant structure. The proper balance between traditional music notation and other forms of musical representation, like GS, together with computational methods of dynamic generation of notation seems to point out to the fact that a new possibility that may allow the effective implementation of a computational process able to invert the natural order of music making, that starts in the composition building, moves through performance and ends up in the listener (corresponding to the musical processes of sensation, appraisal and affection). Once that symbolic music notation can be dynamically generated and controlled by a computer model, this musical structure no longer needs to be as a static architecture that is later decoded by a performer, but that can be created by aspects of its interpretation or listening.

1. Generating music notation in real-time

As mentioned, this article addresses the possibility of dynamically creating musical notation. This session presents a brief overview on the design considerations of computer models capable of generating musical notation in real-time oriented by parameters retrieved by musical descriptors. This aims to provide an initial settlement for the development of processes of music notation dynamically generated through a computer model capable of automatically creating notation in a way that is easy to be played by a performer, which means low computational processing cost for the machine and low cognitive cost for the performer to understand and play it on time. However, at the same time, this model has to be able to express musical concepts with enough precision and depth to let the musical process designer express the musical richness of a meta-composition; the compositional process of generating a musical composition. Its Meta-Composer (the composer of a music composition) is here the individual who programs a computer system that generates musical notation (deterministic, stochastic or adaptive), controlled by musical descriptors (symbolic or acoustic). The meta-composer lays down the compositional rules of a process of generating and automatically searching for compositional models (deductive, inductive or abductive), which in turn automatically creates musical compositions, stratified in the form of a finished graphic structure of a music notation score.

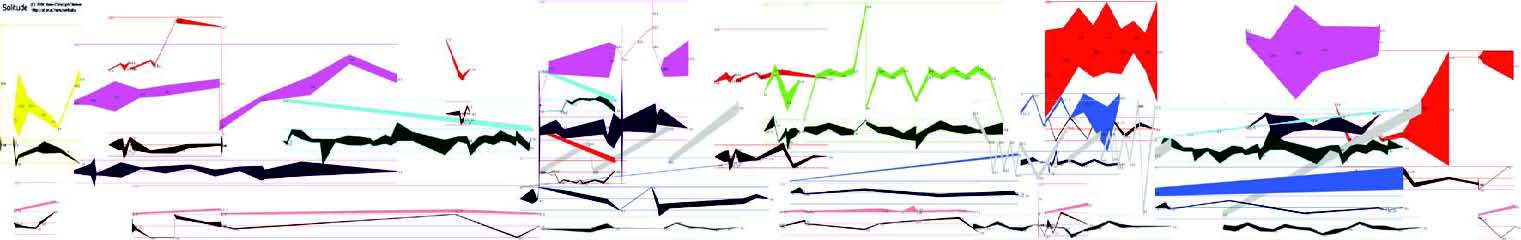

All forms of traditional music notations (including the experimental ones, developed in the 20th century) have in common the fact of having a fixed, static structure, which corresponds to the lacking of a feedback loop, from the compositional structure with musical performance. A good example is the virtual computer notation, developed by Hans-Christoph Steiner in PD (www.puredata.info), which is shown in Figure 1. This one describes in a graphical terms the processing steps of transformations on a pre-recorded audio material (a piano solo). Nevertheless, it lacks the interactivity of such structure with a performer, being it a human musician or a computational algorithm player.

2. Interactive notation

Different from the example above, the model here proposed is an interactive computer system that allows the creation of dynamic virtual musical notation structures, which are formed through the feedback data predicted by musical descriptors over the musical material, initially generated by improvisation. Furthermore, by principle, we intended to develop this computer system in an open source platform, in order to guarantee its free and public access; the same type of access that everyone has to a traditional music notation, which any score can be accessed (and copied) by anyone that was musically trained to read music. Secondly, this model necessarily needs to require little computational effort to be processed, so it is not bounded to need powerful machines, so anyone with a regular laptop can run it. Its design also has to be easy to understand by the users so they can easily interact and control the notation system in real-time. The difference between the system shown in Figure 1 and the one proposed here is that the last one includes notation interactivity, which means the ability to generate notation from the interaction of the computer model with the performer, without the need of synchronizing it note-by-note or phrase-by-phrase, but bound to a rhythmic self-regularity.

The process of generating interactive music notation can use several strategies to conceal some implementation issues in order to guarantee scores generated in real-time. For that, the model necessarily has to be: 1) Consistent (without contradictions or redundancies), 2) Complete (able to express all musical notations for its intended goal), and 3) Cognizable (easy to read, understand and implement). In this context, here is presented a preliminary study for the development of a computer model that is capable of generating such interactive scores. This work investigates a computational implementation throughout rules for generating musical composition. These rules serve as organizational elements of an automatic compositional process of musical human-machine interaction, creating an environment that may explore the concepts of: 1) Interpretation, 2) Improvisation and 3) Composition in real-time. For that, it is defined here a distinction between Improvisation and Composition. Improvisation is here understood as an elaboration of musical ideas in real-time, without the final construction of a formalized registration in a music notation. Composition is strictly based on the principle of musical notation, which is necessarily developed asynchronously, which means; not in real-time. In this state, musical structure is gradually developed in an atemporal built by the composer, similarly to the work of a sculptor, that can go back and forth in different part of the piece until the artist is pleased with the final result.

Unlike other forms of musical notation, interactive notation, as presented here, is a notation that is not fixed, but that may change dynamically over time, especially during the performance that creates it. This can also be adaptive, whose computer system is controlled by acoustic aspects collected by musical descriptors, where the compositional process is mediated by the interaction between performer and computer, through compositional rules determined by the (meta) composer. Thus, the development of a computational model for interactive rating has to take into consideration both the notational aspects of music and the gestural data related to their performance in order to create a dynamic and open system that creates similar but variant notations.

3. Affective parameters

Some aspects of evoked emotions are associated with minute involuntary changes of physiological signals. These are commonly named as Biosignals [Kim 2004]. They can be retrieved from the variation of bodily reactions, such as: 1) Skin resistance (Galvanic Skin Response - GSR), 2) Heart beat, (retrieved by electrocardiogram - ECG, or photoplethysmography - PPG), 3) Respiratory rate, 4) Pupil diameter, and so forth. In terms of the emotion evoked by listening to music, such biosignals can describe both the range of emotional states of short duration (Affects) as the long-term ones (Moods) (Blechman, 1990). According to some scholars in the field of music psychology, Affects are normally related to short chunks of music, of 3 to 5 seconds long. It allows to convey the sense of present moment, or the “now” time in music (James, 1893). Moods are usually evoked by the exposition to longer terms of musical listening, as the average duration of a musical performance, such as a symphony or any usual musical show, that are about one hour along. These emotional effects persist for long periods of time and may influence other biological rhythms, such as the emotional classes expressed by the circadian cycle (Moore, 1982).

The informational content given by the emotion evoked while listening to music and correlated biosignals, permeates and connects three interdependent areas of music: 1) Structure (composition), 2) Appreciation (listening) and 3) Performance. This compounds a system in which music derives as an emergent property of their interaction. Here, this is created by the cooperation between: Computer, Composer and Performer. In systemic terms, this creation can be described as a Self-Organizing process (Kauffman, 1993). This process creates order by an informational flow of regularities emerged from the dynamic aspects of music, and resulting in habits that are identified by the human cognition as the ones constituting its musical meaning.

Usually the notational structure remains unchanged as it occurs in the dynamics of a performance and / or music appreciation. However, the structure also emerged from a self-organized process that took place into the composer’s psychological universe. Such a medium behaves like an open and complex system, which undergoes the action of external agents (here called inspirational factors) that ultimately set the final shape of the structure of a composition, solidified in the form of music notation. However, there can also be cases where the music structure (or its set of rules) are not fixed, but may dynamically change during the performance. Examples of such processes are found in popular music performances; in the contextualized improvisation of jazz; on happenings, free improvisations; and even in algorithmic compositions, such as the famous stochastic process of musical composition known as: the dice game of Mozart (Chuang, 1995).

There are four elements to be considered in the computational implementation of the algorithm of interactive musical notation. They are, the already mentioned: 1) Structure, 2) Appraisal, 3) Performance, and also the 4) Informational flow. This one is formed by the emotional reaction evoked by music. The behavior of this flow is determined both by involuntary data (through their correlation with biosignals, as further described) and the predictions of musical descriptors (corresponding to what is further described as voluntary data). These two types of data can be retrieved not only from musicians but also from listeners, for example, through the acquisition of data from their emotion reactions during performance. In technical terms, this procedure should preferably be non-invasive and wirelessly transmitted. The final result of an auto-organizational process of music making of this sort can be either: 1) in the form of a fixed music notation structure, such as the traditional music sheet, or 2) a virtual structure that remains always dynamic, through the implementation of a graphical process that can even use measurements of voluntary and involuntary data, that compound the informational flow, thus controlling the generational process of a continuous sound, represented by a dynamic virtual music score that is interpreted by the musician at the same time that it is generated by the computer model. This is the type of implementation presented in this study.

4. The computer model

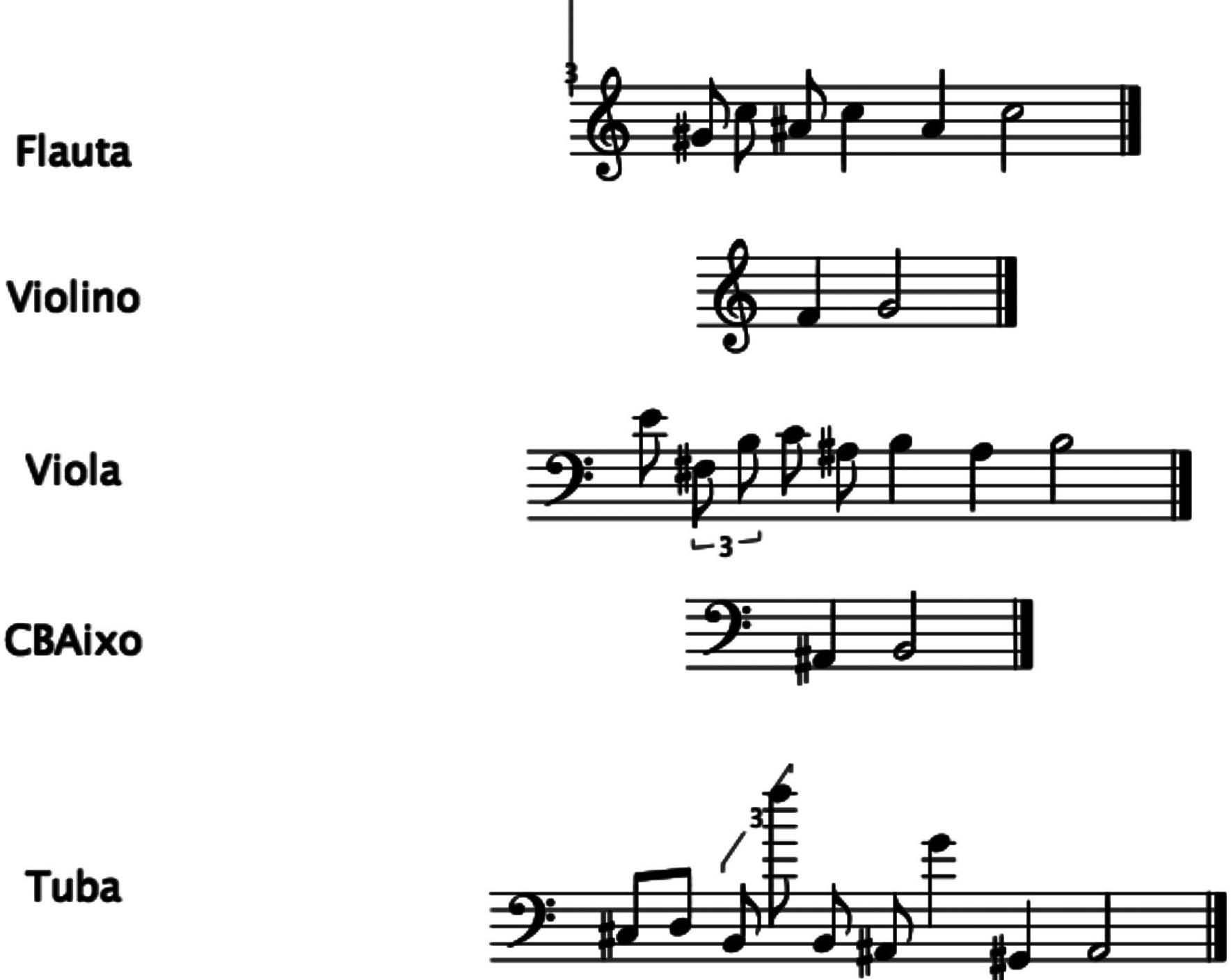

The computational model of the system described here was developed in PD (www. puredata.info), an open-source computer environment for the design of real-time processing of control, audio or video data. The algorithm designed in PD is called: patch. In this work, it was designed an interactive music patch to create musical notation in real-time to be performed by a quintet of musicians, consisting of: Flute, Violin, Viola, Counter-bass and Tuba. For that, this model consists of five groups of subroutines (each one is called: a subpatch). Each subpatch is comprised of two subroutines; one collects data from two psychoacoustic aspects (loudness and pitch) and the other calculates the corresponding note for each specific instrument. Each subpatch performs this process for each one of the five musical instruments used here. Psychoacoustic descriptors are also know as free-of-context, or lower-level descriptors (Bogdanov, 2009). The first music descriptor detects in real-time the most relevant partial components of the sound frequency spectrum, collected by the microphone of the computer, where the patch is running. The frequency of the partial with higher intensity is assigned as the current fundamental frequency of sound, usually related to pitch. This data is later converted to notes in MIDI protocol, whose pitch vary from 0 to 127 – which covers the range of all musical instruments of a symphonic orchestra – and loudness, also from 0 to 127 – which covers all changes of dynamics, from pianissimo to fortissimo. Each instrument has its own note range respected by a compositional rule that limits this parameter. For instance, Tuba pitch range is here considered between C2 and C5, which corresponds to the MIDI notes 36 and 72. For the Counter-bass, the range is between E1 and E4, or 28 to 64, in MIDI notes. For the Viola, notes are: C3 and C6, corresponding to the MIDI notes 48 and 84. For the Violin, these are G3 and G5, or 55 and 79, in MIDI notes. Finally, the Flute has a range considered here between C4 and C6, which is 60 to 84 in MIDI notes. All pitch ranges was determined based on the information given by the musicians who volunteered to participate in this experiment. They considered these regions as being comfortable to be payed, especially in a sight-reading performance, which is the case of this experiment. The 5 most relevant frequency partials detected by the computer model, are converted into notes, which are assigned to be into the regions within the range of each musical instrument, as described above. When a partial frequency represents a note outside the region of these instruments, a simple folding rule is applied that recalculates the pitch to the nearest octave of this note that is within this instrument range, where this note is relocated. Here is also used another basic rule of prioritizing smaller pitch intervals over larger ones, on consecutive notes of the same instrument. This is done in order to enhance the gestalt principle of good continuation (Banerjee, 1994), that help to form melodic lines, for all instruments. The second descriptor calculates the time step of the attack (also known as: onset) of the musical stimulus, as collected by the microphone. This compositional rules is applied, firstly, to calculate the begin of each bar of the virtual notation, seeking to synchronize these five voices so that they are able to be read and played together. Secondly, this rule seeks to calculate rhythmic cells. This is based on the average mean calculated for the last 8 bars, which is displayed in a graphic representation formed by a grid with five staves, where the melodic lines of each instrument is shown, arranged in rhythmic metrics that is not too complex for a professional musician to sight-read, and promote a consistent tempo; a regular metric pattern which is similar in different instruments, or contra-punctual, once that the first 5 highest partials used to create the notes necessarily force the harmony simple, traditionally called “consonant”. This was observed in several moments during the rehearsals of the computer model with the quintet. The next figure shows a moment of the dynamic score created by this virtual music notation. This score varies continuously and endlessly along time, up to the moment where these musical process is still running. The PD patch calculates and transmits via OSC (Open Sound Control) data of their voices, where the music notation here viewed is rendered in another free platform, named: InScore (http://inscore.sourceforge.net/).

Figure 2: Snapshot of the dynamic music score of the interactive music notation.

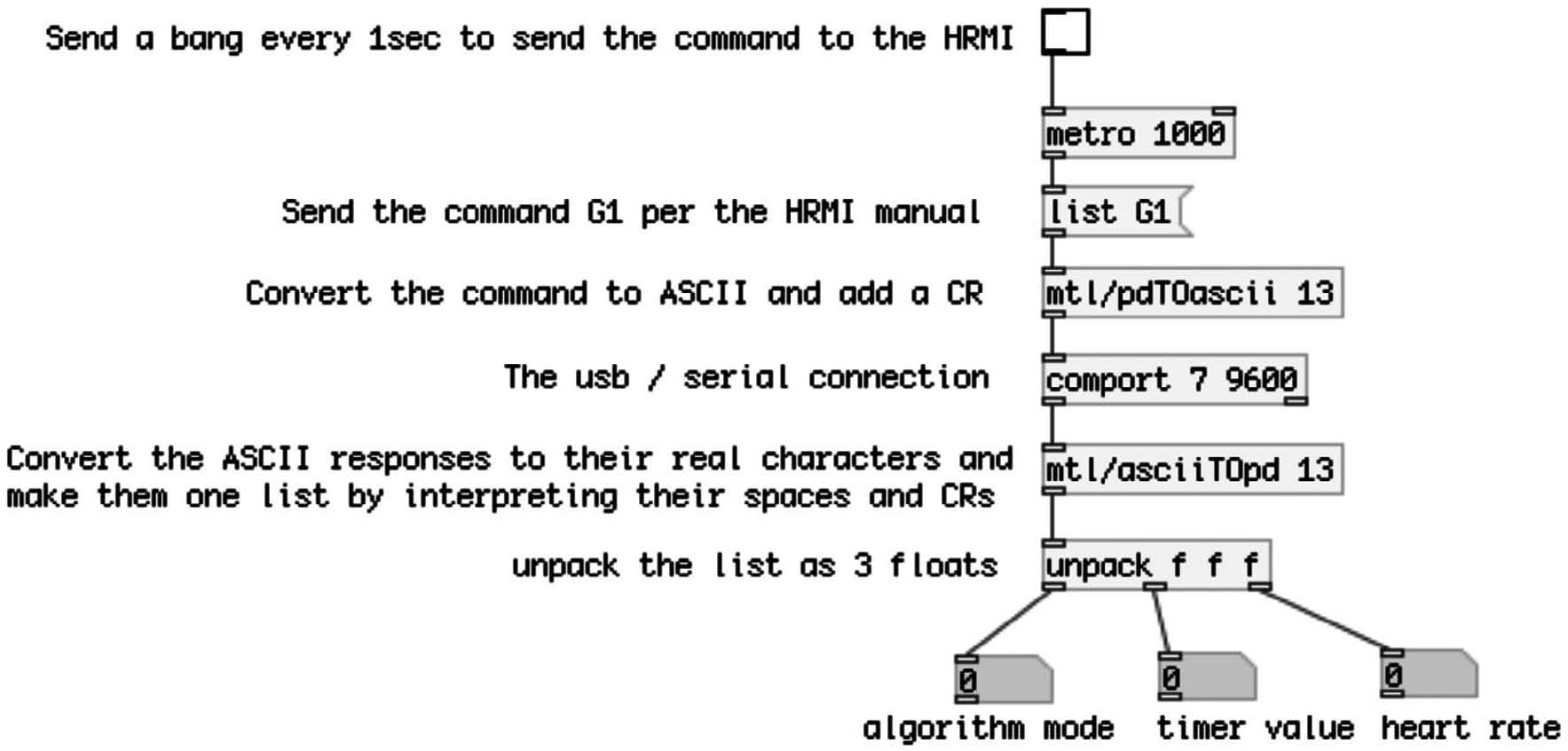

Through the predictions of musical descriptors, data is collected from the psychoacoustic aspects of a musical performance, with different degrees of freedom, starting as free improvisation, and culminating into the formal execution of the score prepared by the dynamic computing model, with data retrieved from sensors. These are gestural data that can be of two categories: 1) Voluntary, 2) Involuntary. Voluntary data are the one related to conscious body movements (movement of arms, legs, torso, head, etc.) that are controlled by the Sympathetic Nervous System (SNS). Voluntary gestural data can be collected with motion sensors such as accelerometers, gyroscopes or tracking location systems. Involuntary data are the autonomous body gestures, controlled by the Parasympathetic Nervous System (PSN) which are normally done unconsciously, such as: heart rate, peristalsis, pupil dilation, sweating, and so forth. The involuntary gestures can be collected by biosignals sensors, as briefly describe before. An interesting feature of involuntary data is the fact that they often have a correspondence with the individual’s emotional state. Thus, involuntary data used to control the computer model of dynamic generation of musical notation can explore the possibility of creating methods to control the automatic generation of musical scores based on the informational flow given by evoked emotions, from which culminates the self-organization of a virtual music score, somewhat correlated with the emotion evoked by the music making process. To this end, we tested a system for heart beat monitoring; the Heart Rate Monitor Interface (HRMI). This is a simple external interface given by a piece of hardware that can be connected to a computer through an USB port, that converts ECG signal collected together with a short range wireless transmission unit, given by the Polar Electro Heart Rate Monitor (HRM), developed by Polar Inc. for cardiac monitoring of athletes. The heart rate data are thus collected from the HRMI and retrieved by another patch here developed; a simple algorithm which is shown in Figure

3. The HRM is an equipment produced in large quantities, for thousands of individuals who practice cardiovascular activities, such as jogging. For this reason this equipment is affordable and reliable for our purposes. Figure 3 shows the patch developed to read data from the HRMI hardware.

Figure 3: Algorithm (patch) for retrieving real-time HRV data from HRMI hardware.

Systems similar to the one here presented allow the control of various parameters of compositional generation by involuntary gestures. For example, it would be possible to control an adaptive algorithm which extracts the notational evolution over time, based on parameters of musical descriptors. Such computer models can be extended to generate artificial soundscapes (landscape of sounds) through the control of evolutionary computation models, such as the evolutionary sound synthesis method described in (Manzolli, 2010). The cognitive characteristics of artificial soundscapes can be dynamically controlled by biosignal data, with the variation of the emotional state of short term (Affects) and long term (Moods) durations (Blechman, 1990). The next step for the computational model presented here might be to use data associated with involuntary changes in the emotional state, of short and long duration, in order to parameterize a computer model to generate interactive self-organizing virtual musical score independently for each musician. The aim is thus to develop a strategy for the feedback of the informational flow, thus enabling fulfill the implementation of this system, where the habit of this informational flow is the direct representation of the self-organization of musical meaning (Oliveira, 2011). With this, we have a dynamic generation process of music notation based on the interaction between composer and performer, mediated by technological apparatus of sensors of involuntary gestures (biosignals) that control the computational model by processing algorithms representing the computational model of musical generation.

Music, as an immaterial art of time, depends on the existence of registration methods to be registered and recreated again. Traditional western music notation is a robust solution to, up to an extent, register it. However, just like a road map is the representation of a road, not the actual road, formal music notation is a representation of music; not the real music, that is only manifested through the interaction between performance and listening. Notation represents the algorithmic steps (the recipe) for the generation of a performance of a compositional structure, which allows the musician to find his/her way through endless possibilities of sounds that a musical instrument is capable to offer. As it runs, this intermediating “musical algorithm” translates graphic objects of musical notation to gestures that correspond to sound objects of a performance. The traditional timing order of events that constitutes a musical creative process begins with the cognitive appraisal of structuring and ends with the evoked emotion of performing; a process mediated by musical listening. However, the technological resources of today’s computer music allow to reverse the natural order of formal music composition, which may be initiated by a musical process of free improvisation which leads the self-organization of a formal music notation structure, corresponding to a musical score, and thus closes a feedback informational loop, which might be expressed by the following analogy: a computational process in which “art imitates life that imitates art.”

Conclusions

The study presented here aims to lay the groundwork for the development of further methods of interactive music notation implemented in computational models. This is a system of algorithmic computer music that has the ability of systematically self-organize, in the form of a coherent structure of music notation, formed out of data collected in real time by music descriptors during a performance that is at first improvisational. Through the triadic interaction: computer-composer-performer, free improvisation is the starting element intervening into the system, represented by the computational process of automatic composition of music notation. This process can be extended by using one or more of the three elements further described, which are not mutually exclusive. They are: 1) Music descriptors, which are computer models that predict specific aspects of music, 2) Gestural interfaces, given by motion sensors, such as accelerometers, gyroscopes and spatial positioning detectors, and 3) Biosignals, corresponding to data collected by non-invasive sensors retrieving involuntary physiological reactions, associated with changes in the emotional state of individuals, in this case, the musicians during performance, or the listener in the audience. As said, the computational model developed here is open-source; implemented on PD (www.puredata.info). The visual display of the virtual score is rendered with INScore (www.inscore.sourceforge.net). The system generates dynamic structures of traditional music notation, representing musical works that emerge from the open system, whose regularities arise from the process of self-organization of the informational flow generated by data collected by musical descriptors and / or biosignals. In the model described here, data is dynamically collected by psychoacoustic descriptors. At the end of the process, a final structure is created by this dynamic musical process and registered, so it may be performed later, in a traditional fashion, by a similar group of musicians. The resulting music score, as such, is a product of the process. Each time the system runs, a new music score is produced. However, rather than being created by a single composer, this type of musical piece is the result of a process of distributed creation, emerging from the self-organized interaction between human and machine, and expressed in the dynamic interplay between composer, computer and performer, which arises the figure of the Meta-Composer; the composer of a compositional process.

In contrast to other forms of traditional music notation, this one is interactive and can be seen as a structured process of notation that is dynamic and adaptive. The development of this computational model took into account both aspects of music representation (acoustic and symbolic) controlled by improvisational gestures. The informational flow of evoked emotions, from music listening and performing, permeates three interdependent areas of music: Structure (composition), Appraisal (listening) and Performance (playing). From this compounding system, music derives from the interaction of three agents: Computer (algorithm), Composer (designer) and Performer (musician). Together they create a self-organizing process that emerges from the regularities of an open system, whose musical aspects of the informational flow are detected by the mind, where emerging habits (quasi regularities) link together to form the musical meaning. Traditionally, music notation structures remain unchanged, while being dynamically decoded by the performance and appraised by the listening. However, this notation is the end result of a previous selforganizing process that happened on another open system; the mental universe of the composer, later influenced by the performer and also by the listener. Altogether, they form an open and complex system, influenced by external agents, here referred as “inspirational” which outline the final shape of a musical composition. The work presented here aims to extend this self-organizing process of traditional composing, as already happens in other music styles, whose structure is not fixed, but dynamically variable, and able to change along the performance. Examples of such processes are found in the improvisations of popular music and jazz, in artistic happenings, free improvisations, and so forth. The interactive musical notation computer model presented here is inspired in those styles, but extend their possibilities in the range of musical creativity process that is not finished but remain dynamic during performance, allowing this model to continually replenish itself, therefore closing the feedback loop between performance and dynamic composition of music notation. A video showing a first test with such interactive notational computer model, can be watched at:http://youtu.be/p0_A93QIUk4.

References

BANERJEE, J. C. Gestalt Theory of Perception. In: Encyclopaedic Dictionary of Psychological Terms. M.D. Publications, p. 107-109. 1994.

BLECHMAN, E. A. Moods, Affect, and Emotions. Hillsdale, NJ: Lawrence Erlbaum, 1990.

BOGDANOV, D. From Low-Level to High-Level: Comparative Study of Music Similarity Measures. In: IEEE INTERNATIONAL SYMPOSIUM ON MULTIMEDIA, 11., Kos, Grecia, 2009. Anais... Kos: IEEE, 2009.

CHUANG, J. Mozart’s Musikalisches Würfelspiel. A Musical Dice Game for Composing a Minuet. Disponível em: <http://sunsite.univie.ac.at/Mozart/dice>. Acessado em: 01 set 2012.

FORNARI, Jose; Path of Patches: Implementing an Evolutionary Soundscape Art Installation. EUROPEAN EVENT ON EVOLUTIONARY AND BIOLOGICALLY INSPIRED MUSIC, SOUND, ART AND DESIGN, 9., Torino, ITALIA, Vol. 1, p. 1-10, 2011. Anais... Idem, 2011.

HELMHOLTZ, H. On the Sensations of Tone as a Physiological Basis for the Theory of Music. 4. ed. Londres: Longman Green, 1912.

JAMES, William. The Principles of Psychology. New York: H. Holt, 1893. p. 609.

KAUFFMAN, Stuart A. The Origins of Order: self-organization and selection in evolution. Oxford University Press, 1993.

KIM, K. H., Bang, S. W., Kim S. R. Emotion Recognition System Using Short-Term Monitoring of Physiological Signals. Medical and Biological Engineering Computation, vol. 42, n. 3, p. 419-427, 2004.

KIM, J. H. and Uwe S. Embodiment and Agency: Towards an Aesthetics of Interactive Performativity. SOUND AND MUSIC COMPUTING CONFERENCE, 4., 11-13 July 2007, Lefkada, Grécia. Anais... Idem, 2007.

MANZOLLI, J., SHELLARD, M., FORNARI, J. O Mapeamento Sinestésico do Gesto Artístico em Objeto Sonoro. Revista OPUS, vol. 14, p. 407-427, 2010.

MEYER, L. Emotion and Meaning in Music. Londres: University of Chigado Press, 1956.

MENZIES, T. Applications of Abduction: knowledge level modeling. International Journal of Human Computer Studies, p. 305-355, 1996.

MOORE, Gordon E. Cramming More Components Onto Integrated Circuits. Electronics Magazine. p. 4. 1965.

MOORE, E., SULSZMAN, M., FRANK M., FULLER, C. A. The Clocks that Time Us: physiology of the circadian timing system. Cambridge, MA: Harvard University Press, 1982.

OLIVEIRA, L. F., FORNARI, J. SHELLARD, M., MANZOLLI, J. Abdução e Significado em Paisagens Sonoras: um estudo de caso sobre a instalação artística repartitura. Revista Kinesis, Vol. 3, p. 43-67, Porto Alegre, RS, Brasil, 2010.

PEIRCE, C. S. The Collected Papers of Charles S. Peirce. Cambridge: Harvard University Press, 1931.

SLOBODA, J. A. The Musical Mind: the cognitive psychology of music. Oxford: Oxford University Press, 1985. 268p.

TRIESCH, J. Automatic Face and Gesture Recognition. IEEE INTERNATIONAL CONFERENCE, 3., Anchorage, Alasca (EUA), 1998.

VAIDYA P. G.; Rong, H. Using Mutual Feedback Chaotic Synchronous Systems. Tsinghua Science and Technology, Vol. 2, n. 3, p. 687-693, 1997.

WRIGHT, O. The Modal System of Arab and Persian Music AD 1250-1300. Oxford, ING: Oxford University Press, 1978.

XENAKIS, Iannis, Formalized Music: thought and mathematics in music. Indiana: Indiana University Press, 1971.

José Fornari - Desde 2008, pesquisador, carreira PQ, no NICS (Núcleo Interdisciplinar de Comunicação Sonora) da UNICAMP. Realizou um Pos-doutorado (2007) em Cognição Musical, no grupo MMT (Music and Mind Tecnhology) da Universidade de Jyvaskyla, Finlândia, e outro Pos-doutorado (2004) em Síntese Sonora Evolutiva, com bolsa da FAPESP. É Mestre (1994) e Doutor (2003) pela FEEC / UNICAMP. Tem bacharelado em Música Popular (1993) pelo Departamento de Música do IA / UNICAMP e Engenharia Elétrica (1991) pela FEEC / UNICAMP.